The hype around AI has reached a fever pitch. Visit nearly any popular medical website. You are bound to see writing that says AI is “revolutionizing” healthcare, “transforming” diagnostics, analyzing “complex medical imaging,” and providing “cutting-edge” care. But where is this happening? In the hospitals where I work, I have yet to see a radiology report mentioning that it was created with AI or a diagnosis (or even a differential!) suggested to me by lab studies.

One is more likely to see machine learning used at a third-party data analytic company that contracts its services rather than the hospital or clinic. Medicine is notoriously a slow adopter and this will continue with AI. The regulations and bureaucracy are complicated, and, frankly, there is a lack of knowledge about how AI works and its use cases. If we are not careful or aware, phrases like “machine learning” and “artificial intelligence” will become buzzwords, just like “innovation.”

For the hospitals that wish to use artificial intelligence and its subdomains, several hurdles must be overcome: from hiring people who have expertise to having enough structured or organized data for a program to learn, to top-notch cybersecurity, to knowing what kind of questions to ask and problems to solve. Unless the foundation is established early, it is hard for me to see how AI will make a dramatic impact at your typical hospital (i.e. not a top-notch academic medical center with billions in endowment).

Here are some challenges with AI that we face:

Adversarial Learning

The first major challenge with implementing AI is not getting hacked, which is easier said than done. Every year, we see examples of large institutions being breached (like here, here, and here). Unfortunately, these cases demonstrate that it is often not a matter of if but when. Cybersecurity is an arms race. As hospitals improve their protection, bad actors race to beat it. Ironically, the AI systems that a hospital hopes to guard might succumb to machine learning algorithms designed to compromise them. This technique is called adversarial learning.

Researchers have revealed machine-learning spam filters that can learn which words to use to bypass other machine-learning spam filters. Rather than steal healthcare data, a hacker may decide to contribute poisoned data to a training set, affecting the outputs of a machine learning algorithm. For the fans of computer vision, researchers have shown that altering even one pixel on an image, undetectable to the human eye, can cause a deep learning algorithm to reclassify an image. Imagine what that may do to an algorithm looking for cancer on an X-ray or CT scan.

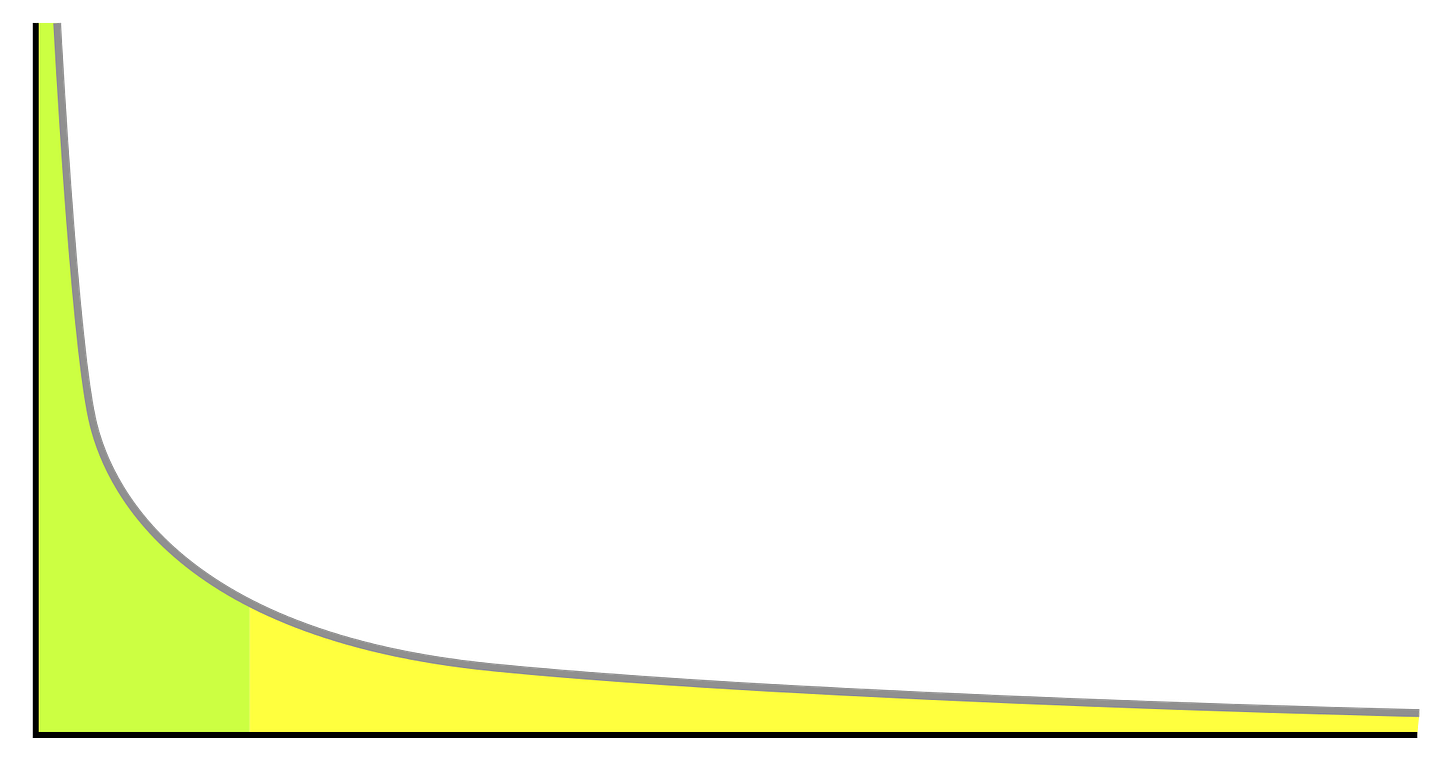

Long-Tail Problems

In statistics, the long-tail phenomenon represents a distribution of high-frequency events (say common medical diagnoses like diabetes, CAD, UTI, pneumonia), which are followed by low-frequency events (the “zebras”) that decrease asymptotically as one moves further along the graph. However, in daily medical practice, it is not difficult for humans to diagnose the common problems that occur every day at clinics and emergency departments.

The challenge is when we must manage rare diseases that elude easy diagnosis. In these cases, the machine-learning algorithm does not have enough data to train. How much data is required to reach the high accuracy demanded in medicine is unknown. The performance of each algorithm is unique to the problem it is trying to solve and the algorithm needs refinement over many iterations and some number of epochs. For the rarer conditions, where AI might be life-saving and cost-effective, we may not have enough data to develop accurate training and test data sets. This is exacerbated by healthcare’s long-standing interoperability problem, where organizations refuse to share information for many reasons.

Value Alignment Problem

The value alignment problem, or AI alignment, represents the challenge for AI programmers to ensure that the systems they create align with the interests of humans. Will the AI act to advance human objectives? Is it learning or making inferences that we want it to? Or is it taking dangerous shortcuts to achieve its objective that may discount important information?

An example of an AI alignment problem is how large language models train on datasets that may be biased or wrong. Just think of how Chat-GPT might output falsehoods because it learned from information containing popular misconceptions. Another major challenge is scalable oversight, which is a method to supervise an AI system that outperforms humans. The oversight must be reliable so that we are not deceived into making harmful decisions because the AI developed a power-seeking strategy through reinforcement learning, which incorporates “rewards” to drive an algorithm to improve its output.

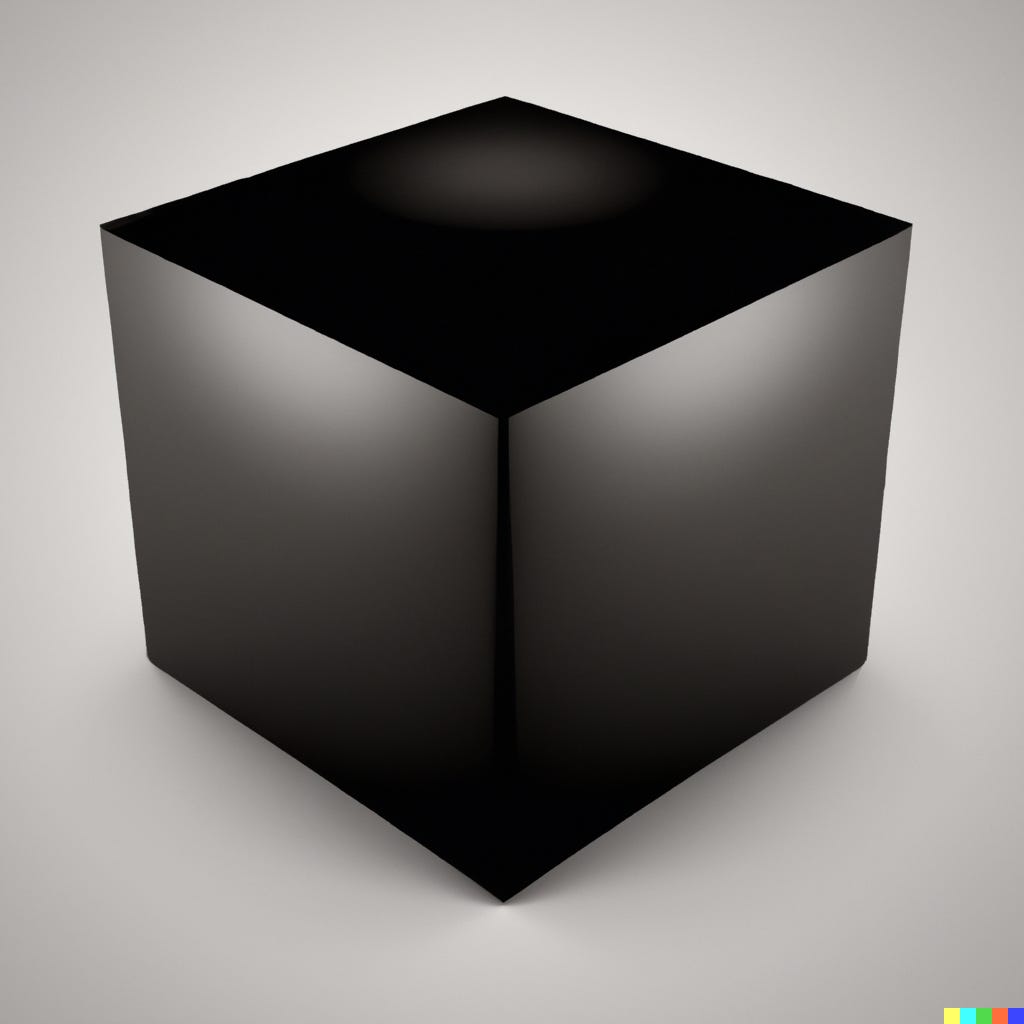

Black Box

Another central question that makes medical researchers and data scientists wary is the black box problem. Deep learning algorithms process inputs or incoming data and produce outputs or solutions. In the invisible layers, the algorithm computes and analyzes thousands to millions of calculations and inferences. Unfortunately, these interconnected equations make it practically impossible to determine how the system derived its conclusion. So if the algorithm makes an error, it will be difficult to explain why. Even worse, we may not recognize the error until someone gets hurt.

One solution is to slow down the speed at which we use AI in medicine to make decisions (which will likely be the case). The second solution is to create AI systems that are transparent, also known as explainable AI (XAI). These systems help humans retrace the steps the algorithm uses to derive its conclusions, which in turn may help mitigate many of the issues on this list.

Overfitted Data

A prominent critique we often see asked about medical research is whether or not the conclusions are generalizable. Overrfitted data occurs when a machine-learning algorithm becomes so attuned to the training data, perhaps because it overtrained, that it cannot generalize insights and solutions with the test data.

The ramifications of overfitting matter because training machine-learning algorithms requires time, energy, and money. If a health system caters to different populations across many states, training data for each group may require the algorithms to be trained from scratch each time. Are organizations prepared for this?

Bias

As you may have realized by now, some big problems in AI usually come down to the training data available to prepare the deep learning algorithms. Because systemic bias has historically (and presently) been a challenging obstacle to providing inclusive and optimal care in medicine, it is not a surprise to see healthcare disparities compounded in AI research. Whether due to race, gender, geography, sexual orientation, or disability, as alluded to earlier, there isn’t enough good data because healthcare systems are not interested in sharing them with their competitors.

As a result, we see algorithms that may perform worse in diagnosing skin cancer on darker skin, chest X-rays that are not as accurate on underrepresented genders, or misclassifying breast cancer in disadvantaged populations. Future questions include whether or not AI models should be continuously updated as new data is available or if specific AI models should be trained for certain populations. Each has a risk of introducing a different type of bias depending on the data. Additionally, how much government regulation is required? With some states banning diversity, equity, and inclusion efforts, this might hamper efforts of reducing bias, not just in AI, but healthcare in general.

Transfer Learning

Finally, one of the challenges we face in AI is transfer learning, which is when knowledge derived by an algorithm from one task can be applied to another related task. For example, a model that trains to detect cancers on CT scans can subsequently work with MRIs. If we apply an algorithm that detects abdominal cancers on CT scans to one that finds lung cancers on CT scans, this is known as domain adaptation, which is a subcategory of transfer learning.

Transfer learning has many benefits, including reducing training time, improving algorithm performance, requiring less data, and enhancing cost savings. Additionally, many AI researchers believe that transfer learning is necessary if we wish to develop artificial general intelligence, also known as strong AI, which is essentially an autonomous computer program that can meet or outperform human performance at many tasks.

Whether we can (or should) create programs at this level is hotly debated. Currently, we have artificial narrow intelligence, or weak AI, which can only execute specific, focused tasks that it trains for. Even ChatGPT, despite demonstrating conversational skills in different domains, is an example of narrow AI. We can trick it into giving wrong answers and it can only retrieve answers and formulate responses on the dataset that it has trained on. Despite its impressive use cases, ChatGPT won’t drive a car, order your groceries on the Internet, or even correctly cite research articles from the Internet.

Conclusion

While AI is an exciting field and deserving of research and study, while I would love to see it deliver on its promise of detecting and curing diseases, reducing the cost of healthcare, and improving people’s lives, we must walk a fine line between cautiousness and optimism. Of course, we should look to expand the capabilities of medicine and technology, but we must also develop a strong foundation to build upon.

We must improve healthcare interoperability and reduce systemic bias so that not only can we have diverse and robust datasets to train our algorithms, but we ensure that underserved patients can get the traditional care they have been lacking. We must update aging hospital systems to have the latest cybersecurity systems. We must work to improve the accessibility and affordability of medical care. The inefficiencies produced by AI in healthcare will only worsen when applied to an already inefficient healthcare system. If we want to make all the effort placed into advanced technologies worth the time and cost, let’s get the basics right first.